To facilitate secure data transfer, the NVIDIA driver, operating within the CPU TEE, uses an encrypted "bounce buffer" located in shared system memory. This buffer acts as an intermediary, ensuring that all communication between the CPU and GPU, including command buffers and CUDA kernels, is encrypted, thereby mitigating potential in-band attacks.
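As a rough illustration of the bounce-buffer idea, the sketch below models the shared buffer as an object the untrusted host can read and shows that only sealed data ever lands in it. It is a toy, stdlib-only stand-in: the real driver uses hardware-backed authenticated encryption with keys established between the CPU TEE and the GPU, whereas here `BounceBuffer`, `seal`, and `open_sealed` are hypothetical names, and the cipher is an HMAC-SHA256 counter-mode keystream with an HMAC tag (encrypt-then-MAC), chosen only because it runs without third-party packages. It is not suitable for protecting real data.

```python
import hashlib
import hmac
import os


class BounceBuffer:
    """Hypothetical model of a staging buffer in shared (host-visible) memory.

    Anything stored here can be read or modified by the untrusted host,
    so only (nonce, ciphertext, tag) triples are ever written to it.
    """

    def __init__(self):
        self.slot = None


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    # Counter-mode keystream from HMAC-SHA256 used as a PRF (toy construction).
    out = bytearray()
    counter = 0
    while len(out) < length:
        block = hmac.new(key, nonce + counter.to_bytes(8, "big"), hashlib.sha256).digest()
        out.extend(block)
        counter += 1
    return bytes(out[:length])


def seal(enc_key: bytes, mac_key: bytes, plaintext: bytes):
    """Encrypt inside the trusted side, then authenticate the ciphertext."""
    nonce = os.urandom(16)
    ks = _keystream(enc_key, nonce, len(plaintext))
    ct = bytes(p ^ k for p, k in zip(plaintext, ks))
    tag = hmac.new(mac_key, nonce + ct, hashlib.sha256).digest()
    return nonce, ct, tag


def open_sealed(enc_key: bytes, mac_key: bytes, nonce: bytes, ct: bytes, tag: bytes) -> bytes:
    """Verify the tag first; refuse anything the host may have altered."""
    expected = hmac.new(mac_key, nonce + ct, hashlib.sha256).digest()
    if not hmac.compare_digest(expected, tag):
        raise ValueError("bounce buffer contents were tampered with")
    ks = _keystream(enc_key, nonce, len(ct))
    return bytes(c ^ k for c, k in zip(ct, ks))


# Assumption: both keys were already shared by the CPU TEE and the GPU.
enc_key, mac_key = os.urandom(32), os.urandom(32)
buf = BounceBuffer()
command = b"launch kernel vector_add"
buf.slot = seal(enc_key, mac_key, command)           # CPU TEE writes ciphertext only
received = open_sealed(enc_key, mac_key, *buf.slot)  # GPU side verifies and decrypts
```

The host only ever sees `buf.slot`, which holds a random nonce, ciphertext, and tag; flipping any ciphertext bit makes `open_sealed` raise instead of returning attacker-controlled data.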

Our recommendation for AI regulation and legislation is simple: monitor your regulatory environment, and be ready to pivot your project scope if required.

User devices encrypt requests only for a subset of PCC nodes, rather than for the PCC service as a whole. When asked by a user device, the load balancer returns a subset of PCC nodes that are most likely to be ready to process the user's inference request. However, because the load balancer has no identifying information about the user or device for which it is choosing nodes, it cannot bias the set toward targeted users.
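A minimal sketch of the node-selection property, assuming a simple readiness and load model: the selection function receives only node state and never a user or device identifier, so it has no input through which it could bias the set toward a particular user. `Node`, `choose_subset`, `build_envelope`, and `can_decrypt` are hypothetical names; the envelope stands in for a request whose key would, in a real system, be wrapped separately with each selected node's public key.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    node_id: str
    ready: bool   # assumed: node is attested and able to accept work
    load: float   # assumed metric: current utilisation, 0.0 to 1.0


def choose_subset(nodes, k, rng):
    """Return up to k ready nodes. Note the signature: no user or device ID."""
    ready = sorted((n for n in nodes if n.ready), key=lambda n: n.load)
    pool = ready[: 2 * k]  # least-loaded ready nodes are likeliest to serve quickly
    return rng.sample(pool, min(k, len(pool)))


def build_envelope(subset):
    # Stand-in for per-node key wrapping: records which nodes could decrypt.
    return {"recipients": frozenset(n.node_id for n in subset)}


def can_decrypt(node_id, envelope):
    return node_id in envelope["recipients"]


# Ten nodes; every third one is not ready.
nodes = [Node(f"n{i}", ready=(i % 3 != 0), load=i / 10) for i in range(10)]
subset = choose_subset(nodes, k=3, rng=random.Random(42))
envelope = build_envelope(subset)
```

Sorting by load and sampling randomly from the least-loaded pool is just one plausible policy; the property being illustrated is only that user identity is absent from the selector's inputs, and that nodes outside the returned subset are not among the request's recipients.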

User data is never available to Apple, even to staff with administrative access to the production service or hardware.

The University supports responsible experimentation with generative AI tools, but there are important considerations to keep in mind when using these tools, including information security and data privacy, compliance, copyright, and academic integrity.

How do you keep your sensitive data or proprietary machine learning (ML) algorithms safe with many virtual machines (VMs) or containers running on a single server?

Personal data may be included in the model when it is trained, submitted to the AI system as an input, or produced by the AI system as an output. Personal data from inputs and outputs can be used to help make the model more accurate over time through retraining.

Organizations of all sizes face several challenges today when it comes to AI. According to the latest ML Insider survey, respondents ranked compliance and privacy as the greatest concerns when implementing large language models (LLMs) in their businesses.

In parallel, the industry needs to continue innovating to meet the security needs of tomorrow. Rapid AI transformation has drawn the attention of enterprises and governments to the need to protect the very data sets used to train AI models, as well as their confidentiality. Concurrently and following the U.

To help address some key risks associated with Scope 1 applications, prioritize the following considerations:

Regulations and legislation usually take time to formulate and establish; however, existing laws already apply to generative AI, and other laws on AI are evolving to cover generative AI. Your legal counsel should help keep you updated on these changes. When you build your own application, you should be aware of new legislation and regulation that is still in draft form (such as the EU AI Act) and whether it will affect you, in addition to the many others that may already exist in the locations where you operate, because they could restrict or even prohibit your application, depending on the risk the application poses.

Generative AI has made it easier for malicious actors to create sophisticated phishing emails and "deepfakes" (i.e., video or audio intended to convincingly mimic a person's voice or physical appearance without their consent) at a far greater scale. Continue to follow security best practices and report suspicious messages to phishing@harvard.edu.

And this data must not be retained, including through logging or for debugging, after the response is returned to the user. In other words, we want a strong form of stateless data processing in which personal data leaves no trace in the PCC system.
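The stateless-processing requirement can be illustrated with a small request handler, under stated assumptions: `handle_request` and `run_model` are hypothetical, the model is a trivial placeholder, and logging is restricted to non-personal metadata (size and latency). The request arrives in a mutable `bytearray` that is overwritten once the response has been produced. This shows the pattern only; Python cannot guarantee that no other copy (for example the `bytes` copy passed to the model) survives in memory, so a real deployment would need guarantees below the application layer as well.

```python
import logging
import time

log = logging.getLogger("inference")


def run_model(prompt: bytes) -> bytes:
    # Hypothetical stand-in for the model: simply uppercases the prompt.
    return prompt.upper()


def handle_request(request: bytearray) -> bytes:
    start = time.monotonic()
    try:
        return run_model(bytes(request))
    finally:
        # Overwrite the request buffer in place so this copy of the
        # personal data does not outlive the response.
        for i in range(len(request)):
            request[i] = 0
        # Log only non-personal metadata (size, latency), never content.
        log.info("handled request: %d bytes in %.3f s",
                 len(request), time.monotonic() - start)
```

Usage: pass the decrypted request as a `bytearray`; after `handle_request` returns, the buffer contains only zero bytes and the log line records nothing about what the user sent.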

You may need to indicate a preference when you create your account, opt into a specific type of processing after you have created your account, or connect to specific regional endpoints to access their service.

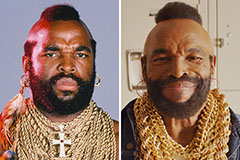

Mr. T Then & Now!

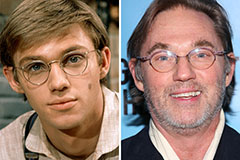

Mr. T Then & Now! Robbie Rist Then & Now!

Robbie Rist Then & Now! Richard Thomas Then & Now!

Richard Thomas Then & Now! Batista Then & Now!

Batista Then & Now! Katey Sagal Then & Now!

Katey Sagal Then & Now!